Recursive Neural Networks (RecNNs)

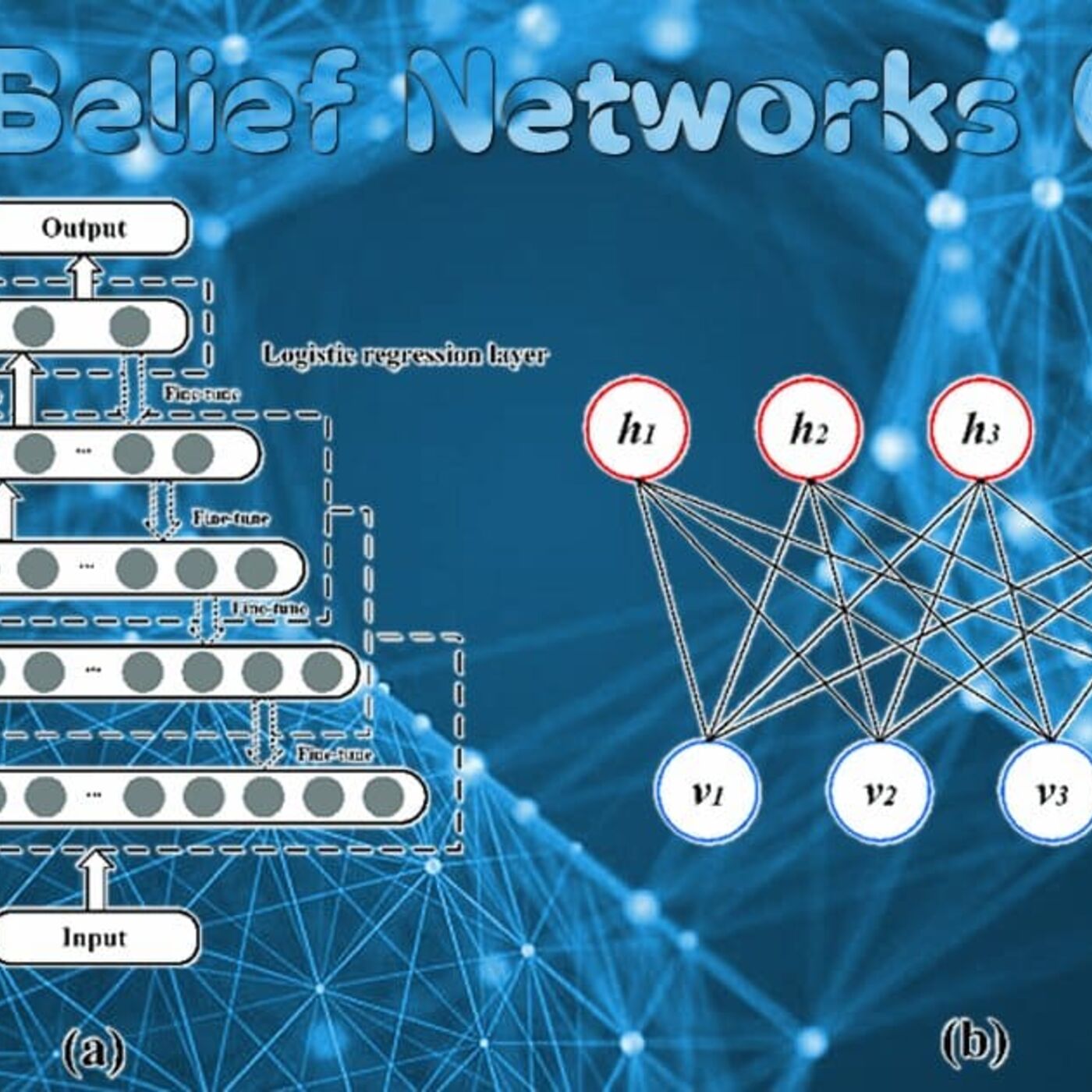

Among neural network architectures, Recursive Neural Networks (RecNNs) are designed to capture hierarchical structure in data. Unlike the more widely known Recurrent Neural Networks, which process sequences, RecNNs operate on tree-like structures, which makes them well suited to tasks such as syntactic parsing and sentiment analysis.

1. Unveiling Hierarchies in Data

The core trait of RecNNs is that they process data hierarchically. Instead of working through the input linearly, a RecNN follows a tree: it computes a representation for each node from the representations of its children, working from the leaves up to the root. This makes RecNNs a natural fit for data that can be represented as a tree, and lets them model patterns and relationships that a purely sequential architecture may not represent explicitly.

2. Natural Language Processing and Beyond

One of the most prominent applications of RecNNs is Natural Language Processing (NLP). Language is hierarchical: sentences are composed of clauses and phrases, which in turn break down into words. RecNNs have been used for syntactic parsing, where a sentence is decomposed into its grammatical constituents, and for sentiment analysis, where the sentiment of individual phrases influences the sentiment of the whole sentence.

3. A Different Approach to Weights

A conventional feedforward network gives each layer its own weights. A RecNN instead typically applies the same composition weights at every node of the tree, much as a recurrent network reuses its weights at every time step. What changes from one input to the next is the shape of the computation, which follows the tree. Because the parameters do not grow with the size or depth of the tree, the same model can process structures of varying complexity.

4. Challenges and Evolution

While RecNNs offer a distinctive way to process data, they come with challenges. Training can be computationally intensive because every example may have a different tree structure, which makes batching difficult. Capturing long-range dependencies in very deep trees is also hard.
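
A common formulation of a RecNN reuses one composition function at every node, so the computation takes the shape of each input's tree. The following is a minimal sketch of that idea in plain Python, under stated assumptions rather than a reference implementation: the weight values, the two-dimensional word vectors, the nested-tuple tree encoding, and the names `compose` and `encode` are all made up for illustration, and training is omitted entirely.

```python
import math

# Assumption: toy 2-dimensional vectors; real models use far larger ones.
DIM = 2

# Assumption: hand-picked weights standing in for learned parameters.
# One matrix of shape DIM x (2 * DIM) and one bias, reused at every node.
W = [
    [0.5, -0.3, 0.2, 0.4],
    [-0.1, 0.6, 0.3, -0.2],
]
b = [0.0, 0.0]

# Assumption: made-up word vectors, for illustration only.
EMBED = {
    "not": [-0.9, 0.1],
    "very": [0.2, 0.8],
    "good": [0.7, 0.3],
}


def compose(left, right):
    """Combine two child vectors into a parent: tanh(W [left; right] + b)."""
    children = left + right  # list concatenation, length 2 * DIM
    return [
        math.tanh(sum(w * x for w, x in zip(row, children)) + bias)
        for row, bias in zip(W, b)
    ]


def encode(tree):
    """Encode a tree bottom-up; a tree is a word or a (left, right) pair."""
    if isinstance(tree, str):
        return list(EMBED[tree])  # leaf: look up the word vector
    left, right = tree
    return compose(encode(left), encode(right))  # same weights at every node


# Same three words, two different bracketings.
right_branching = ("not", ("very", "good"))
left_branching = (("not", "very"), "good")

root_a = encode(right_branching)
root_b = encode(left_branching)
print(root_a)
print(root_b)
```

Both trees contain the same three words, yet they produce different root vectors, because the order of composition follows the tree. The recursion also hints at why batching is awkward: each input dictates its own sequence of `compose` calls.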
To address some of these training and depth concerns, innovations and hybrid models have emerged that blend the strengths of RecNNs with other architectures.

5. A Niche but Potent Tool

RecNNs may not be as widely recognized as some of their counterparts, but they remain a strong choice for tasks where hierarchy matters. Their design illustrates the variety of neural network models and shows that different problems often demand specialized solutions.

In summary, Recursive Neural Networks are built for hierarchical data and model structure that other architectures might gloss over. As we work toward a more nuanced understanding of complex data, architectures like RecNNs are a reminder of the depth and diversity of the tools at our disposal.

Kind regards by J.O. Schneppat & GPT 5